Home > The crucial role of Apache Kafka and Hadoop in Data Engineering

Feeling overwhelmed by an endless stream of data without making sense of it? This article shows how Apache Kafka and Hadoop, two Big Data giants, work together to streamline your data management and boost processing power. Discover how these tools are redefining data infrastructure and powering large-scale applications!

In the world of distributed systems, Apache Kafka and Hadoop form a powerful duo for enterprises. Kafka excels in real-time data streaming, while Hadoop shines in batch processing. But how do they work together day to day?

Here are their key technical complementarities:

This technical symbiosis addresses the challenges faced by modern companies managing both continuous data streams and large historical datasets.

Their distributed operation relies on elastic clusters. But how does it work in practice? Let’s take the example of a social network: Kafka ingests every user interaction in real time, while Hadoop stores the full history for weekly analysis.

A typical case? Application monitoring. Logs are streamed via Kafka in real time, allowing for instant incident detection. At the same time, Hadoop gathers this information for monthly reports. To master these technologies, check out our courses on the fundamentals of Apache Kafka and the introduction to Hadoop.

It’s worth noting that companies using Kafka often pair it with large-scale storage systems like Hadoop.

*Source: Data Platforms Study 2023

Wondering which big data technology to choose for your data projects? Let’s analyze Kafka and Hadoop with a practical look at real-world applications.

| Feature | Kafka | Hadoop |

|---|---|---|

| Processing | Real-time (Streaming) | Batch |

| Latency | Low | High (tolerates I/O latency) |

| Fault tolerance | High (partition replication) | High (HDFS block replication) |

| Main use case | Ingestion and real-time data stream processing | Storage and processing of large datasets |

| Architecture | Distributed streaming platform | Distributed storage and processing framework |

| Legend: This table compares Kafka and Hadoop across key aspects such as processing, latency, fault tolerance, and use cases. | ||

To get a clearer picture, let’s break down the specifics of each solution:

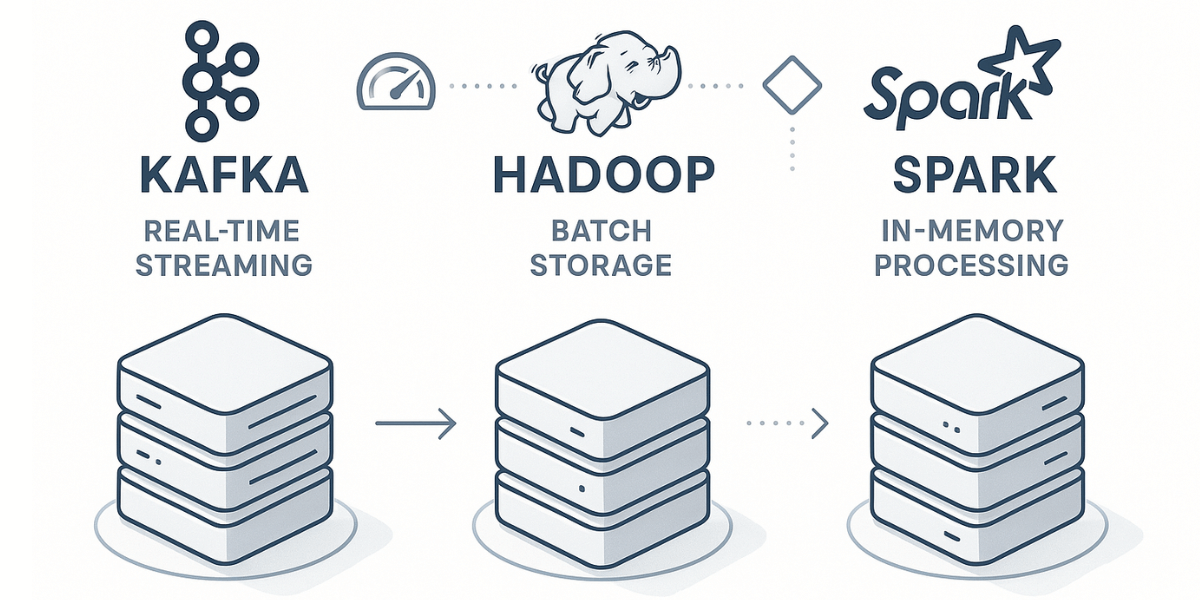

In practice, modern ETL pipelines often blend these tools. Kafka acts as a responsive buffer for streams, Spark speeds up transformations, while Hadoop clusters persist some data. But how do you orchestrate this complex machinery?

With the rise of the cloud, services like Azure HDInsight make it easier to deploy these platforms. Serverless capabilities allow Kafka clusters to auto-scale based on workload—perfect for businesses with fluctuating needs.

On the security side, best practices are evolving. Encrypting Kafka streams (via TLS) and fine-grained access management in Hadoop remain essential. Regulated companies often add centralized logging layers to audit data sources.

It’s also worth noting that integration with other components (such as NoSQL databases or BI tools) influences the technology choice. A well-designed platform should allow smooth communication between all these elements, without creating bottlenecks.

The combination of Kafka and Apache clusters is transforming multiple industries. Let’s look at how these technologies are being applied in the field, with real-world examples.

In finance, companies combine Kafka with cloud platforms to detect fraud. The system captures live transaction streams, while Apache clusters cross-reference this data with historical sources.

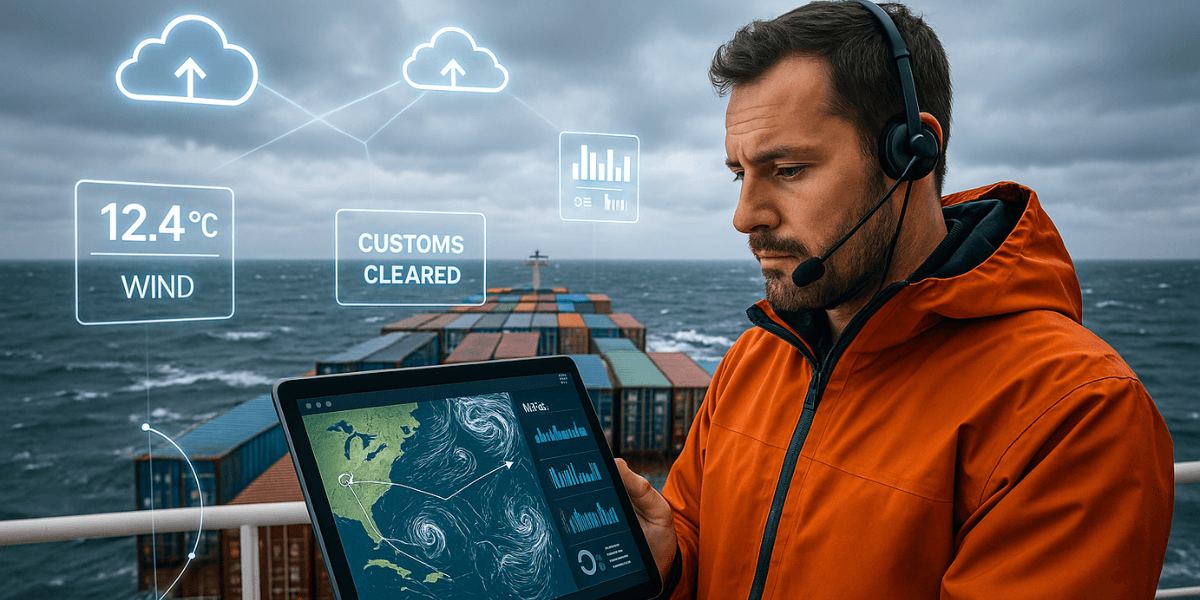

Maritime transport also showcases powerful use cases. Thanks to IoT streams processed by Kafka, logistics companies optimize their routes in real time. Scalable architectures merge weather data, GPS positions, and customs constraints.

Retail is another sector leveraging these tools to personalize promotions. Customer behavior streams flow through Kafka, while clusters analyze trends across petabytes of data. The result: highly targeted marketing campaigns without compromising privacy.

To grow your expertise in data engineering with Kafka and Hadoop, solid training is essential. Apache certifications and those from major cloud providers (AWS, GCP, Azure) are real assets for professionals. Let’s look at how to structure your learning journey. Where to begin?

The ideal path? Alternate between hands-on lab work and online courses. Master the fundamentals before diving into complex architectures. Employers particularly value this mix of theory and practice. Pro tip: always document your experiments!

Here’s a proven method to master Kafka and big data platforms:

This step-by-step approach will help you develop in-demand skills.

For testing, prioritize sandboxes (Cloudera, Hortonworks) and local simulators. These isolated environments are perfect for exploring architectures safely. Tip: always start with a minimalist cluster before scaling up.

The key? A rigorous setup for your POCs. Document every parameter and test your apps under different loads.

Here are the essential tools for experimentation:

These best practices will help you master distributed processing platforms.

Apache Kafka platforms are rapidly evolving in cloud architectures. Let’s take a look at what lies ahead for these data clusters.

Integration with Machine Learning in production is gaining traction. Apache Kafka is increasingly used to feed ML models within clusters—both via streaming and batch processing. A major step forward for real-time prediction delivery. But beware: what about the specific needs of batch applications?

On the infrastructure side, containers are redefining deployments. Kubernetes simplifies elastic cluster management, especially for high-frequency streams. How can these solutions be adapted to hybrid cloud architectures?

Source traceability is becoming critical in organizations. Structured metadata now makes it possible to track the origin of streams while ensuring data quality. A key aspect of distributed clusters!

The GDPR challenge remains in decentralized architectures. Companies must secure sensitive data streams while ensuring cross-system distribution. The good news: platforms like Apache Kafka now offer native encryption features.

With exploding data volumes, businesses must balance performance with budget. TCO models now account for the hidden costs of oversized clusters. It’s a complex equation—especially for real-time streaming.

Smart compression and tiered archiving are emerging as solutions. In parallel, query optimization on batch sources helps reduce hardware footprint. The result?

You probably know this already: mastering Kafka and Hadoop is essential to excel in data engineering. Combined with Spark, these technologies multiply your ability to process massive datasets. A winning trio to handle large-scale data streams! So, ready to level up your Big Data skills and shape the architectures of tomorrow?

How can I optimize Kafka and Hadoop for variable-rate IoT data?

Adjust Kafka (partitions, compression, batch size) to match the throughput. Kafka Connect helps integrate with sensors. Use real-time monitoring (Prometheus, Grafana) to dynamically allocate resources. On the Hadoop side, YARN handles scaling during ingestion spikes.

How do I integrate Kafka and Hadoop with a DLP solution?

Implement DLP rules in Kafka Consumers or use an external DLP tool. In Hadoop, encrypt data, apply access controls (RBAC), and anonymize sensitive fields. Use DLP APIs to centralize rule and alert management.

What are open source alternatives to Spark in a Kafka/Hadoop ecosystem?

Apache Flink is ideal for real-time stream processing. Storm is lightweight for simple events. Apache Beam supports multi-engine pipelines (Spark, Flink). For batch jobs, MapReduce is still usable. Dask is a Python-based alternative for distributed computing.

How can I ensure disaster recovery for Kafka and Hadoop in a hybrid cloud?

Use MirrorMaker 2 to replicate Kafka and HDFS replication for Hadoop. Automate failover, traffic redirection, and service recovery. Tools like BDR or cloud snapshots can strengthen resilience.

What architecture patterns should I use with Kafka and Hadoop in microservices?

Several patterns are suitable:

ITTA is the leader in IT training and project management solutions and services in French-speaking Switzerland.

Our latest posts

Subscribe to the newsletter

Consult our confirmed trainings and sessions

Nous utilisons des cookies afin de vous garantir une expérience de navigation fluide, agréable et entièrement sécurisée sur notre site. Ces cookies nous permettent d’analyser et d’améliorer nos services en continu, afin de mieux répondre à vos attentes.

Monday to Friday

8:30 AM to 6:00 PM

Tel. 058 307 73 00

ITTA

Route des jeunes 35

1227 Carouge, Suisse

Monday to Friday, from 8:30 am to 06:00 pm.